A microservice team asked me for help with a mysterious issue. They claimed that the ZFS file system was consuming 30% of CPU capacity. I summarized this case study at Kernel Recipes in 2017; it is an old story that's worth resharing here.

1. Problem Statement

The microservice was for metrics ingestion and had recently updated their base OS image (BaseAMI). After doing so, they claimed that ZFS was now eating over 30% of CPU capacity. My first thought was that they were somehow mistaken: I worked on ZFS internals at Sun Microsystems, and unless it is badly misconfigured there's no way it can consume that much CPU.

I have been surprised many times by unexpected performance issues, so I thought I should check their instances anyway. At the very least, I could show that I took it seriously enough to check it myself. I should also be able to identify the real CPU consumer.

2. Monitoring

I started with the cloud-wide monitoring tool, Atlas, to check high-level CPU metrics. These included a breakdown of CPU time into percentages for "usr" (user: applications) and "sys" (system: the kernel).

I was surprised to find a whopping 38% of CPU time was in sys, which is highly unusual for cloud workloads at Netflix. This supported the claim that ZFS was eating CPU, but how? Surely this is some other kernel activity, and not ZFS.

3. Next Steps

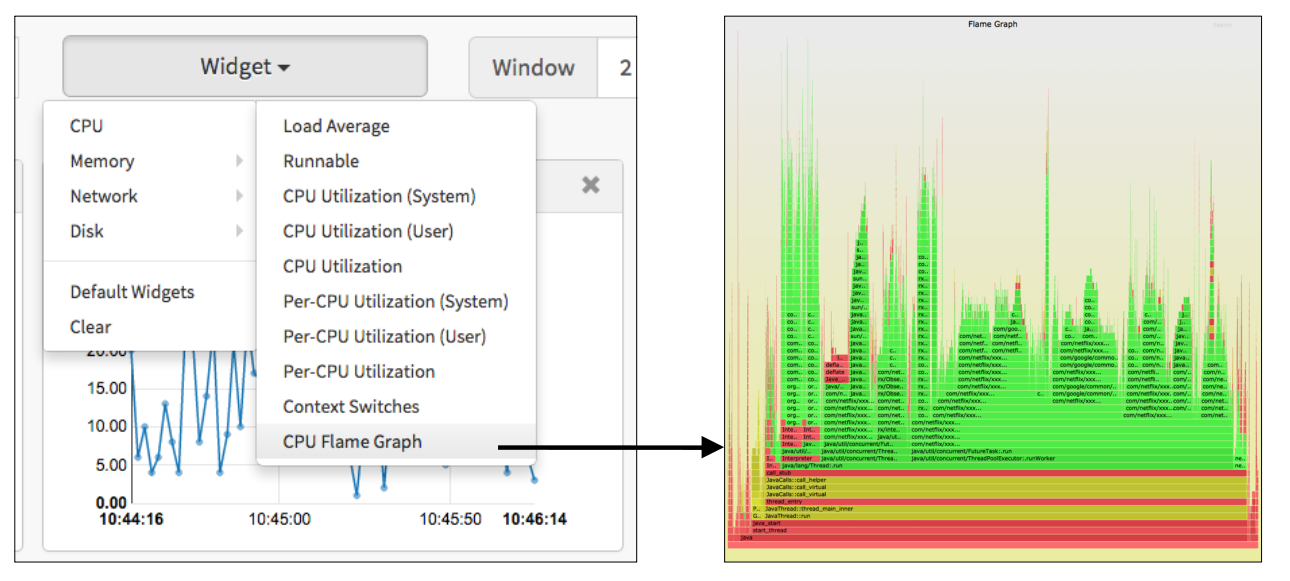

I'd usually SSH to instances for deeper analysis, where I could use mpstat(1) to confirm the usr/sys breakdown and perf(1) to begin profiling on-CPU kernel code paths. But since Netflix has tools (previously Vector, now FlameCommander) that allow us to easily fetch flame graphs from our cloud deployment UI, I thought I'd jump to the chase. Just for illustration, this shows the Vector UI and a typical cloud flame graph:

Note that this sample flame graph is dominated by Java, shown by the green frames.

4. Flame Graph

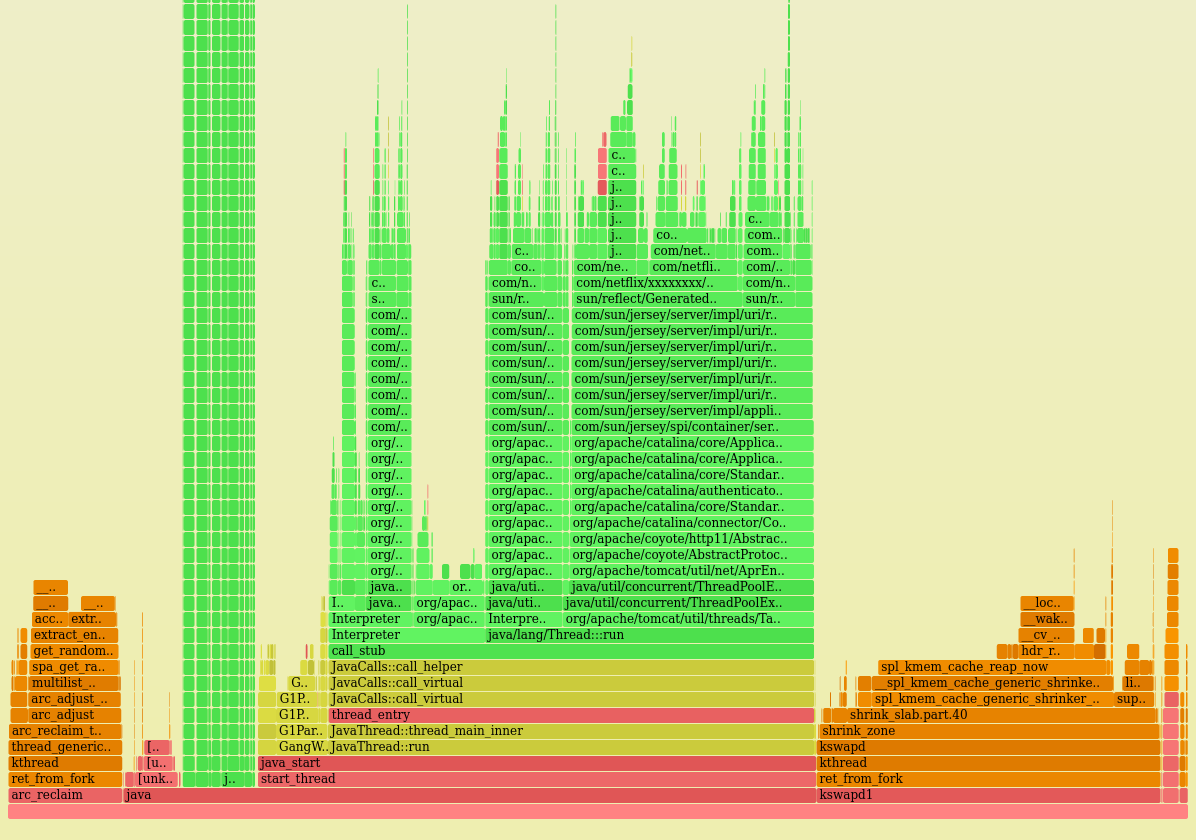

Here's the CPU flame graph from one of the problem instances:

The kernel CPU time pretty obvious, shown as two orange towers on the left and right. (The other colors are yellow for C++, and red for other user-level code.)

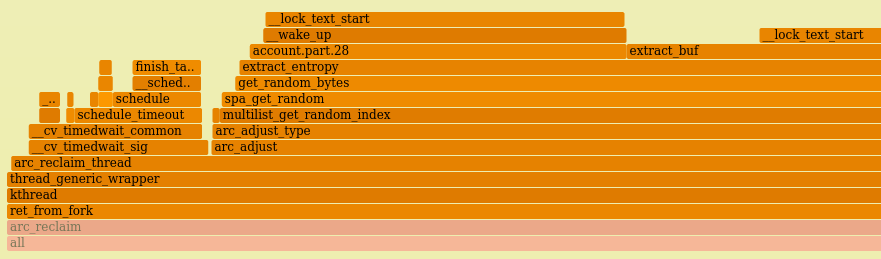

Zooming in to the left kernel tower:

This is arc_reclaim_thread! I worked on this code back at Sun. So this really is ZFS, they were right!

The ZFS Adapative Replacement Cache (ARC) is the main memory cache for the file system. The arc_reclaim_thread runs arc_adjust() to evict memory from the cache to keep it from growing too large, and to maintain a threshold of free memory that applications can quickly use. It does this periodically or when woken up by low memory conditions. In the past I've seen the arc_reclaim_thread eat too much CPU when a tiny file system record size was used (e.g., 512 bytes) creating millions of tiny buffers. But that was basically a misconfiguration. The default size is 128 Kbytes, and people shouldn't be tuning below 8 Kbytes.

The right kernel tower enters spl_kmem_cache_reap_now(), another ZFS memory freeing function. I imagine this is related to the left tower (e.g., contending for the same locks).

But first: Why is ZFS in use?

5. Configuration

There was only one use of ZFS so far at Netflix that I knew of: A new infrastructure project was using ZFS for containers. This led me to a theory: If they were quickly churning through containers, they would also be churning through ZFS file systems, and this might mean that many old pages needed to be cleaned out of the cache. Ahh, it makes sense now.

I told them my theory, confident I was on the right path. But they replied: "We aren't using containers." Ok, then how are you using ZFS? I did not expect their answer:

"We aren't using ZFS."

What!? Yes you are, I can see the arc_reclaim_thread in the flame graph. It doesn't run for fun! It's only on CPU because it's evicting pages from the ZFS ARC. If you aren't using ZFS, there are no pages in the ARC, so it shouldn't run.

They were confident that they weren't using ZFS at all. The flame graph defied logic. I needed to prove to them that they were indeed using ZFS somehow, and figure out why.

6. cd & ls

I should be able to debug this using nothing more than the cd and ls(1) commands. cd to the file system and ls(1) to see what's there. The file names should be a big clue as to its use.

First, finding out where the ZFS file systems are mounted:

df -h mount zfs list

This showed nothing! No ZFS file systems were currently mounted. I tried another instance and saw the same thing. Huh?

Ah, but containers may have been created previously and since destroyed, hence no remaining file systems now. How can I tell if ZFS has ever been used?

7. arcstats

I know, arcstats! The kernel counters that track ZFS statistics, including ARC hits and misses. Viewing them:

# cat /proc/spl/kstat/zfs/arcstats name type data hits 4 0 misses 4 0 demand_data_hits 4 0 demand_data_misses 4 0 demand_metadata_hits 4 0 demand_metadata_misses 4 0 prefetch_data_hits 4 0 prefetch_data_misses 4 0 prefetch_metadata_hits 4 0 prefetch_metadata_misses 4 0 mru_hits 4 0 mru_ghost_hits 4 0 mfu_hits 4 0 mfu_ghost_hits 4 0 deleted 4 0 mutex_miss 4 0 evict_skip 4 0 evict_not_enough 4 0 evict_l2_cached 4 0 evict_l2_eligible 4 0 [...]

Unbelievable! All the counters were zero! ZFS really wasn't in use, ever! But at the same time, it was eating over 30% of CPU capacity! Whaaat??

The customer had been right all along. ZFS was straight up eating CPU, and for no reason.

How can a file system that's not in use at all consume 38% CPU? I'd never seen this before. This was a mystery.

8. Code Analysis

I took a closer look at the flame graph and the paths involved, and saw that the CPU code paths led to get_random_bytes() and extract_entropy(). These were new to me. Browsing the source code and change history I found the culprit.

The ARC contains lists of cached buffers for different memory types. A performance feature ("multilist") had been added that split the ARC lists into one per CPU, to reduce lock contention on multi-CPU systems. Sounds good, as that should improve performance. But what happens when you want to evict some memory? You need to pick one of those CPU lists. Which one? You could go through them in a round-robin fashion, but the developer thought it better to pick one at random.

Cryptographically-secure random.

The kicker was that ZFS wasn't even in use. The ARC was detecting low system memory and then adjusting its size accordingly, at which point it'd discover it was zero size already and didn't need to do anything. But this was after randomly selecting a zero-sized list, using a CPU-expensive random number generator.

I filed this as ZFS issue #6531. I believe the first fix was to have the arc_reclaim_thread bail out earlier if ZFS wasn't in use, and not enter list selection. The ARC has since had many changes, and I haven't heard of this issue again.

Click here for Disqus comments (ad supported).